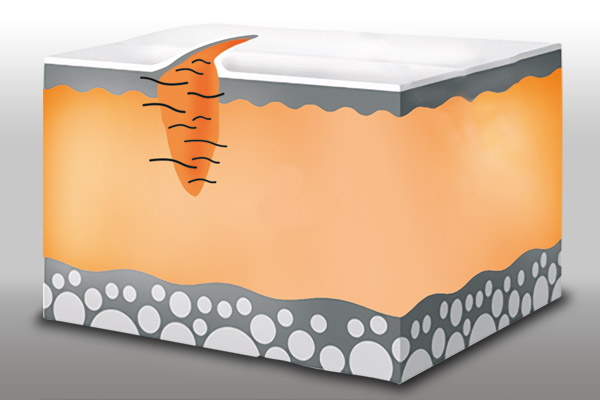

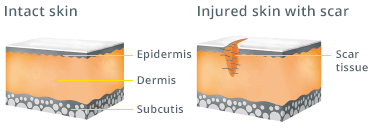

A scar is the inevitable result of a wound that reaches a certain depth. Injuries that affect only the upper layer of the skin (the epidermis) heal without scarring. Once an injury is no longer superficial and penetrates the dermis, however, a scar forms.

The causes of deeper wounds – and subsequent scar formation – are as varied as life itself. They are often the consequence of cuts, stab wounds or bites. Heat injuries such as burns or scalds, and chemical burns caused by skin contact with aggressive chemicals, can also produce deep wounds that may lead to scarring.

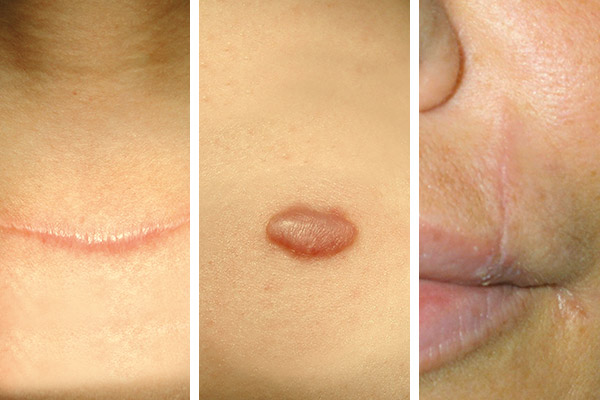

Sooner or later, nearly everyone experiences some kind of scarring. Depending on the extent of the injury, a scar can leave obvious traces. Some of those affected not only find their scars physically obtrusive, but also feel less attractive as a result. This is especially the case if the scars are in places that are hard to cover, such as the face, neck or hands.

This makes it all the more important to treat scars early on – for minimum scar visibility and a positive body image.